UnityNextSpec

Unity8 (f.k.a. Unity Next)

Launchpad Entry: client-1410-unity-ui-shell

Created: Kevin Gunn

Contributors: Thomas Voß, Michał Sawicz, Kevin Gunn

Motivation and Summary

In 2010, Canonical created the Unity project as part of Ubuntu, aiming for a consistent and beautiful session-level shell implementation. From the very beginning, Unity's concepts were tailored with a converged world in mind, where the overall system including the UI/UX scales across and adapts to a wide variety of different form factors.

Back then, the first concrete form factor to see an implementation of Unity was the classical desktop, followed by an implementation for TV sets. With the Ubuntu Touch project revealed to the public, powered by an implementation of Unity and its concepts interpreted for mobile form factors, it is time to start working towards a truly converged Unity: one implementation supporting all of the different form factors. The Unity8 project is the logical evolution of what was started for the desktop, transitioned to the TV and carried over to the phone and the tablet. It unifies the efforts spent on Unity in general and aims for convergence into one deliverable for all form factors: phones, tablets, TVs and desktops.

Note that the original project name for Unity8 was UnityNext. As the project progressed, the name UnityNext was replaced with version numbers. To help with the versioning, references to Unity are as follows:

- Unity7 is used in desktop releases, is based on Nux and is the default through 14.04

- Unity8 has been used in Ubuntu Touch since the beginning, is based on Mir and is the convergence target beyond 14.04

To be clear, the concepts described for the original Unity are still valid. Unity will still be a shell, with a launcher, indicators, switcher, dash etc. Many of the UI design concepts apply directly to Unity Next, together with recent stylistic improvements born out of the Ubuntu Touch Developer Preview effort and new key features such as the notion of the "side stage".

With convergence in mind, we also revisit our choice of technology. While the desktop version of Unity has been built on top of Nux, an attempt at an OpenGL-only toolkit, all of the other incarnations of the Unity shell have been developed on top of Qt/QML. In the past, we chose Nux over Qt/QML (version 4.x) because we needed to provide sophisticated UX and effects by leveraging pure OpenGL whenever necessary. However, with Qt5/QML2, we no longer see the need for a custom OpenGL toolkit, as Qt5/QML2 allows us to easily access raw OpenGL features and performance. Moreover, our experience with Qt5/QML2 during the Ubuntu Touch development was very positive, and we feel confident that it allows us to deliver a sophisticated UX across all form factors. Unity Next was developed largely using Qt Modeling Language (QML) on Digia's Qt framework.

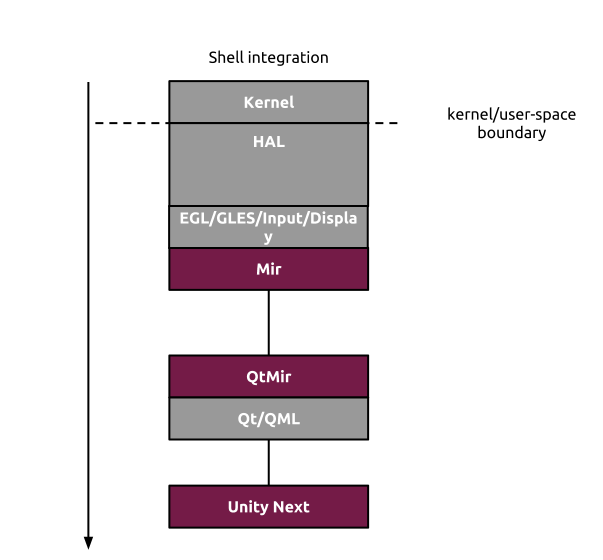

This shift in focus to Qt also provides an opportunity to revisit our approach to window management and composition. On our Ubuntu Touch Developer Preview we initially relied on some modifications to the Android facilities. However, a solution is needed that fulfills our Unity Next "look and feel" requirements across all devices, from phone to desktop to TV. The solution decided upon is a related Ubuntu project known as Mir.

Unity Next will focus on two large efforts on the path to delivering an entry level Ubuntu Phone and eventually cross device convergence:

- Unity Next integrated on top of Mir

- Unity Next UI implemented on Qt (note: much has already been included in the Ubuntu Touch Developer Preview)

Goals and Objectives

The ultimate goal of the next generation of Unity is to fulfill the following three requirements:

- Seamlessly scale across and adapt to multiple form factors, taking into account device specific properties and constraints and optimizing for them.

- Carry Unity’s visual design language and identity across the different form factors and present users with a friendly, well-known and consistent user experience no matter what Ubuntu-powered device they are using.

- Support the idea of a converged device that enables users to rely completely on a mobile device as their primary computing environment by enabling the shell/system user experience to dynamically adapt to different usage scenarios, e.g., morphing the shell to a full-blown desktop shell when connected to an external monitor and external input devices, or to the Unity TV interface when connected to a “big screen”.

Translating this to a more technical level, the next generation of Unity needs to take the following requirements into account:

- Be efficient and very conscious of resource usage: While the Unity shell experience is the most prominent user-facing element of Ubuntu, it must use as few resources as possible to carry out its tasks. In particular, memory, CPU and GPU usage should be as low as possible to make sure that the majority of computing power on a device is available to applications and the user.

- Be aware of the environment: Unity has to be aware of the context it is running in and respond to any changes in this respect with a user visible transition that gives the user a feeling of responsiveness and control. That being said, Unity needs to deeply integrate with device/form factor specific technologies and capabilities such as sensors to make sure that its state is always consistent and meets the user’s expectations.

- Be unified: Given the diverse set of environments that Unity should be able to run in and transition between, the overall architecture, codebase and underlying design principles should aim for as much unification as possible to keep the system maintainable and predictable. Moreover, the more uniform the implementation, the easier it will be to test whether the system fulfills our requirements.

- Be unique: As much as we would like to unify Unity’s codebase, we have to be aware of the fact that certain components of the system will be very form factor/device specific. Even more so, certain parts of the system have to be specific to make sure that Unity operates as efficiently as possible. However, the next generation of Unity should be very explicit about what is device/form factor specific and both architecture and implementation should treat those parts as a detail of the implementation. This further helps in testing the system, rendering it as efficient as possible and in providing a maintainable and extensible setup that allows us to evolve the system in a controlled way.

Scope

Note: the Unity Next project does not encompass Core Applications for Touch

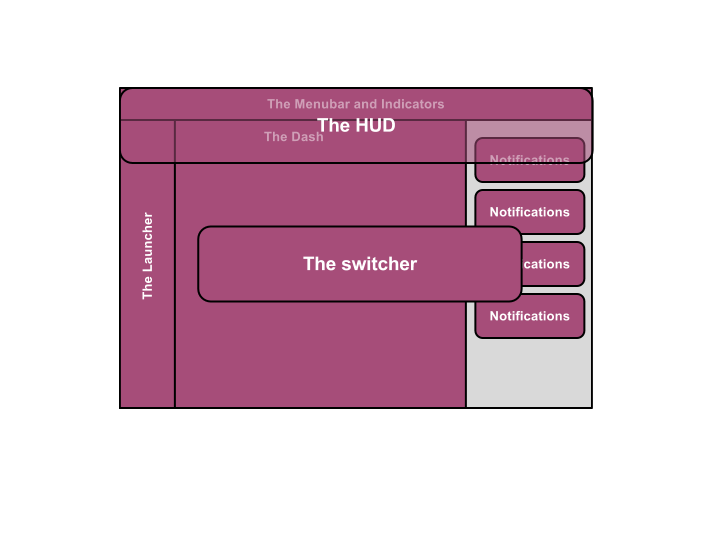

From a project perspective, Unity Next is about completing the shell UI implementation on Qt and integrating it on top of Mir. Unity Next consists of effectively the same components as previous Ubuntu Unity releases, as outlined in the following diagram (please note that the spread component is not depicted for the sake of keeping the diagram readable):

Note: the term Panel is also used to refer to the Menubar component.

High-Level System Architecture

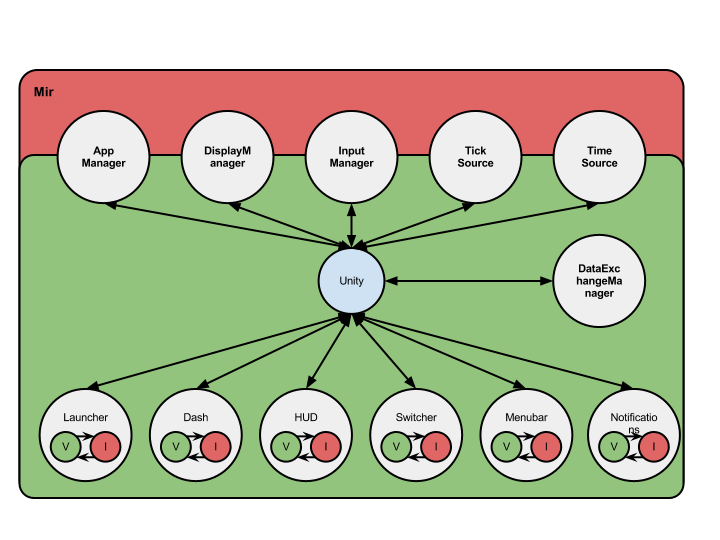

This section defines Unity’s tasks and design with respect to managing the shell components introduced before and its scope with respect to integrating with the rest of the system. Please note that we focus on the main areas of functionality here, and avoid too much detail for the sake of presenting a consistent and easy to understand overview. Given this (overly) simplified view of the world, Unity is essentially a state machine that is composed of the shell elements as sub-states. Moreover, Unity is event-driven and alters the state of its subcomponents as a reaction to arbitrary incoming events. These events can originate from input devices, timers, animations or the surrounding system. In essence, Unity is a controlling instance and takes care of synchronizing any required state transitions.
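
As a rough illustration of the state-machine view described above, the following sketch models the shell as a controller that holds one sub-state per shell element and updates it in reaction to events. It is not taken from the Unity code base: the class names, event names and the transition table are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Visibility(Enum):
    HIDDEN = auto()
    SHOWN = auto()


@dataclass
class Event:
    source: str  # "input", "timer", "animation" or "system"
    name: str    # hypothetical event name, e.g. "edge-swipe-left"


class Shell:
    """Hypothetical controller holding one sub-state per shell element."""

    # Illustrative transition table: event name -> (element, new state).
    TRANSITIONS = {
        "edge-swipe-left": ("launcher", Visibility.SHOWN),
        "launcher-timeout": ("launcher", Visibility.HIDDEN),
        "super-key": ("dash", Visibility.SHOWN),
    }

    def __init__(self):
        self.states = {
            "launcher": Visibility.HIDDEN,
            "dash": Visibility.HIDDEN,
        }

    def handle(self, event):
        """Apply the transition for an event; return False if it is unknown."""
        transition = self.TRANSITIONS.get(event.name)
        if transition is None:
            return False
        element, new_state = transition
        self.states[element] = new_state
        return True
```

Keeping the transitions in a table rather than in scattered callbacks is one way to keep the controlling instance testable without any rendering in place.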

Persistence of Visibility

The state of the launcher and the menubar includes a further pair of states, modelling whether these elements hide automatically or whether they are pinned and do not alter their visibility automatically. For both the launcher and the menubar, if their state is set to auto-hide, these elements are drawn overlaid on top of application surfaces. In any case, the flags need to be maintained per application instance and surface to allow a user to choose the behavior on a per-application basis.
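
One possible way to keep these flags per application instance and surface is a lookup keyed by application, surface and shell element. The sketch below is an assumption for illustration only; the identifiers, the default mode and the class name are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    AUTO_HIDE = "auto-hide"
    PINNED = "pinned"


class VisibilityPreferences:
    """Hypothetical store of launcher/menubar modes per app and surface."""

    def __init__(self, default=Mode.PINNED):
        self._default = default  # assumed fallback when nothing was set
        self._flags = {}

    def set(self, app_id, surface_id, element, mode):
        self._flags[(app_id, surface_id, element)] = mode

    def get(self, app_id, surface_id, element):
        return self._flags.get((app_id, surface_id, element), self._default)

    def drawn_as_overlay(self, app_id, surface_id, element):
        # Auto-hiding elements are drawn on top of the application surface.
        return self.get(app_id, surface_id, element) is Mode.AUTO_HIDE
```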

Next, we need to explore Unity’s scope with respect to application/window management and how it needs to be able to define behavior for focus selection, window placement and event propagation.

Application Management

Much as in its current state, Unity considers the notion of an application as a first class citizen. That being said, Unity extends the classic notion of window management to a unified view in which every application instance contains zero or more windows, where a window is a surface on screen that can be focused and receive input events. Given a so-called application manager, Unity keeps track of running applications and of which application is currently focused, and relies on the application manager to query further details on any given application instance. Moreover, Unity needs to be able to interact with the application manager:

- to switch focus

- to unfocus all applications

- to quit an application instance

- to start an application instance

- for visualization purposes

To implement the HUD functionality, Unity needs to be able to query any actions associated with an application instance, and be notified when these change.
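
The interactions listed above could be captured by an interface along the following lines. This is a minimal sketch under stated assumptions, not the actual application manager API: all method names are hypothetical, and the choice that a newly started application receives focus is an assumption made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Application:
    app_id: str
    windows: list = field(default_factory=list)  # zero or more windows
    actions: list = field(default_factory=list)  # actions exposed to the HUD


class ApplicationManager:
    """Hypothetical application manager as seen by the shell."""

    def __init__(self):
        self._running = {}
        self._listeners = []
        self.focused = None

    def start(self, app_id):
        self._running[app_id] = Application(app_id)
        self.focused = app_id  # assumption: new instances take focus
        return self._running[app_id]

    def quit(self, app_id):
        self._running.pop(app_id, None)
        if self.focused == app_id:
            self.focused = None

    def focus(self, app_id):
        if app_id not in self._running:
            raise KeyError(app_id)
        self.focused = app_id

    def unfocus_all(self):
        self.focused = None

    def running(self):
        """Return the ids of running applications, e.g. for visualization."""
        return list(self._running)

    def on_actions_changed(self, callback):
        self._listeners.append(callback)

    def set_actions(self, app_id, actions):
        self._running[app_id].actions = list(actions)
        for callback in self._listeners:
            callback(app_id, list(actions))
```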

Input Event Filtering

Unity, in its role as the shell, needs to be able to intercept the event stream before any input event has been dispatched to ordinary applications. Moreover, Unity needs to be able to consume input events and prevent them from being propagated further to either system-level components or ordinary applications. To this end, Unity needs to know about a so-called InputManager that allows for registration of input filters.
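
To make the filtering idea concrete, the sketch below shows an InputManager that runs registered filters before delivering an event. Only the name InputManager comes from this document; the method names, the event representation and the example filter are hypothetical.

```python
class InputManager:
    """Hypothetical input manager that runs filters before dispatching."""

    def __init__(self):
        self._filters = []
        self.delivered = []  # stands in for delivery to applications

    def register_filter(self, input_filter):
        """Register a callable that returns True to consume an event."""
        self._filters.append(input_filter)

    def dispatch(self, event):
        for input_filter in self._filters:
            if input_filter(event):
                return False  # consumed, not propagated any further
        self.delivered.append(event)
        return True


def shell_filter(event):
    # Example only: the shell claims the Super key for itself.
    return event.get("key") == "Super"
```

Filters run in registration order, so in this sketch the shell would register its filter before any other component does.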

Display Management

Managing multiple monitors will be an essential task of Unity in that it is responsible for presenting a user with ways to specify monitor arrangement, display mode (clone vs. multiple desktops) and a primary display. To this end, Unity needs to be provided with a component, which we call DisplayManager, that is responsible for detecting any changes to the current monitor/display configuration and for switching specific displays on and off. Please note that this component should also report changes in the orientation of a display.
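
A DisplayManager with these responsibilities might look roughly like the following sketch. Apart from the component name, everything here is an assumption: the observer callback signature, the change names and the rule that the first connected display becomes primary are chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Display:
    name: str
    enabled: bool = True
    orientation: str = "landscape"


class DisplayManager:
    """Hypothetical display manager reporting configuration changes."""

    def __init__(self):
        self.displays = {}
        self.primary = None
        self._observers = []

    def subscribe(self, callback):
        self._observers.append(callback)

    def _notify(self, change, name):
        for callback in self._observers:
            callback(change, name)

    def connect(self, name):
        self.displays[name] = Display(name)
        if self.primary is None:
            self.primary = name  # assumption: first display is primary
        self._notify("connected", name)

    def set_enabled(self, name, enabled):
        self.displays[name].enabled = enabled
        self._notify("enabled" if enabled else "disabled", name)

    def set_orientation(self, name, orientation):
        self.displays[name].orientation = orientation
        self._notify("orientation", name)
```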

Drag’n’Drop and Copy’n’Paste

Inter- and intra-application data exchange is an important part of the overall shell user experience. Here, we consider two different mechanisms for initiating the data exchange and for identifying the data source and target:

- Copy’n’Paste: Data source and destination are identified by focus and copy and paste operations are triggered by applications which in turn are triggered by a user action.

- Drag’n’Drop: A user selects data on a focused application which then becomes the data source and moves the data to be exchanged to the data destination by dropping the data to the destination window.

The overall operation can be split in two steps: First, the user specifies the data source and the actual data to be exchanged, and second, the user identifies the data destination. Once these steps are complete, the actual exchange operation starts, including format negotiations between source and destination and actual data exchange in the case of drag’n’drop. For copy’n’paste, a decoupled model will be deployed, without any sort of communication between source and destination. From Unity’s point of view, the actual exchange mechanism is unimportant and it only cares about the first two steps carried out by the user. To support these steps, we introduce a DataExchangeManager that abstracts away the implementation details and provides Unity with functionality to keep track of current operations, where each operation is identified by a source and a destination.
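
The bookkeeping role of the DataExchangeManager could be sketched as follows. Only the component name is taken from this document; the operation kinds, method names and fields are hypothetical, and the actual exchange mechanism is deliberately left out because the shell does not care about it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Operation:
    kind: str                          # "copy-paste" or "drag-drop"
    source: str                        # step 1: chosen by the user
    destination: Optional[str] = None  # step 2: chosen by the user

    @property
    def complete(self):
        return self.destination is not None


class DataExchangeManager:
    """Hypothetical tracker of data exchange operations."""

    def __init__(self):
        self._operations = []

    def begin(self, kind, source):
        operation = Operation(kind, source)
        self._operations.append(operation)
        return operation

    def finish(self, operation, destination):
        operation.destination = destination

    def pending(self):
        """Operations for which no destination has been identified yet."""
        return [op for op in self._operations if not op.complete]
```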

Unified Tick and Time Sources

Unity heavily relies on animations and smooth transitions to provide an appealing and responsive UX to users. To make sure that animations and transitions are perfectly synchronized with the rest of the system, Unity needs to know about a tick and a time source that is valid for the overall system. Both should be abstracted to account for future changes and to help with testing.
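
The testing benefit of abstracting the time source can be shown with a small sketch: an animation asks an injected clock for the current time, so a test can substitute a fake clock and advance it deterministically. The class names are hypothetical; `time.monotonic` is the only real API used.

```python
import time


class MonotonicTimeSource:
    """Time source backed by the system's monotonic clock."""

    def now(self):
        return time.monotonic()


class FakeTimeSource:
    """Manually advanced time source for deterministic tests."""

    def __init__(self, start=0.0):
        self._now = start

    def now(self):
        return self._now

    def advance(self, seconds):
        self._now += seconds


class FadeAnimation:
    """Hypothetical animation that derives its progress from a time source."""

    def __init__(self, clock, duration):
        self._clock = clock
        self._start = clock.now()
        self._duration = duration

    def progress(self):
        # Fraction of the animation completed, clamped to [0.0, 1.0].
        elapsed = self._clock.now() - self._start
        return min(1.0, max(0.0, elapsed / self._duration))
```

In production the animation would be constructed with `MonotonicTimeSource()`; in tests, `FakeTimeSource` makes the result independent of wall-clock time.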

Putting together what we have said so far in a diagram, the inner core of Unity becomes well-defined functionality-wise. For the components described before, implementations are provided by Mir and we achieve a very tight integration of the shell that in turn allows us to deliver a shell implementation that fulfills the requirements stated in the very beginning of this document. However, as we have clearly stated our expectations regarding the components provided by Mir, we can still test both Unity and Mir in isolation in a very efficient and straightforward way and only need to consider sophisticated full-stack testing for any remaining integration issues. In particular, Unity Next will rely on and have exclusive access to the rendering capabilities offered by Mir.

In this respect, Mir acts as an abstraction layer that allows Unity to easily move across different form factors.

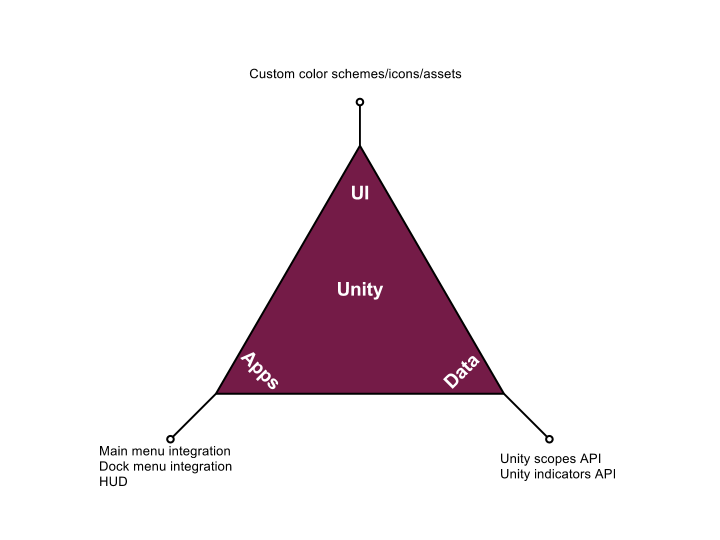

Unity Integration Points

Applications are offered the following means for deeply integrating with the Unity shell:

Main Menu Integration

Applications describe their main menus to the shell in a hierarchical model that allows for flat and nested menus. This description is used in multiple ways:

- The menus are presented to the user in the menubar on the top edge of the screen whenever the user expresses the intent to reveal them.

- The menus are presented in the window titlebar of an application’s surface whenever the user expresses the intent to reveal them.

- The menus are parsed and processed by the HUD service to make them accessible to search operations executed via the HUD.
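
To illustrate how one hierarchical menu description can serve both display and HUD search, the sketch below flattens a nested menu into searchable paths. The data model, the " > " path separator and the substring matching are assumptions made for the example, not the HUD service's actual behavior.

```python
from dataclasses import dataclass, field


@dataclass
class MenuItem:
    label: str
    children: list = field(default_factory=list)


def flatten(item, path=()):
    """Yield one 'A > B > C' path per leaf action in the menu tree."""
    current = path + (item.label,)
    if not item.children:
        yield " > ".join(current)
    for child in item.children:
        yield from flatten(child, current)


def hud_search(menus, query):
    """Return all leaf paths containing the query, ignoring case."""
    needle = query.lower()
    return [
        entry
        for menu in menus
        for entry in flatten(menu)
        if needle in entry.lower()
    ]
```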

Launcher Menu Integration

Applications can provide the shell with a description of the menu that should be displayed on an application’s dock icon. This menu is displayed next to the dock icon whenever the user expresses the intent to reveal it. As with the main menu, the respective actions are parsed and processed by the HUD service for later search operations executed via the HUD.

Fullscreen Preferences

An application is provided with means to inform the Unity shell about its preferences when switching to fullscreen mode. At this point, an application can inform Unity on a per-surface basis whether the panel or menubar at the top of the screen should auto-hide or be always visible, and whether the application can account for an overlaid panel or menubar or needs to be resized.

Stage Hint

The Unity shell offers multiple “stages” that an application can be run in. Here, a stage corresponds to a certain area of the screen together with stage-specific interaction schemes, stage-specific focus semantics and stage-specific display constraints. Applications will be able to transition between different stages and can express their capabilities in this respect.

Unity Extension Points

The Unity shell offers two main extension points for developers and customers, i.e., custom indicators and custom scopes. Custom indicators allow for providing quick access to specific functionality while custom scopes allow for surfacing specific content to the user.

Unity Scopes API

A Unity scope is an entity that renders a certain data source searchable and accessible to the Unity dash. Moreover, it allows for surfacing content to the user, where the notion of content is deliberately kept broad. It could be music, videos, pictures, applications, weather forecasts or something completely different. To achieve this goal, a scope provides means for querying the data source, returning search results in a well-defined schema that is shared with the Unity dash and its lenses. Search results from multiple scopes are then post-processed, rearranged and finally presented to the user.
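
The flow described above (query several scopes, receive results in a shared schema, then rearrange them) can be sketched as follows. This is not the Unity Scopes API: the result fields, the in-memory scope and the sort order used for rearranging are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """Hypothetical shared result schema."""
    title: str
    category: str
    scope: str


class ListScope:
    """Hypothetical scope backed by an in-memory list of (title, category)."""

    def __init__(self, name, items):
        self.name = name
        self._items = items

    def search(self, query):
        needle = query.lower()
        return [
            Result(title, category, self.name)
            for title, category in self._items
            if needle in title.lower()
        ]


def dash_search(scopes, query):
    """Collect results from all scopes and order them by category and title."""
    results = [r for scope in scopes for r in scope.search(query)]
    return sorted(results, key=lambda r: (r.category, r.title))
```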

Unity Indicators API

In the Unity shell UI, an indicator graphically represents the state of an application or subsystem, e.g. network status. Indicators are icons or labels that have a menu associated with them. The menu provides more specific status information as well as commonly used actions to change the state, e.g. connecting to a Wi-Fi network. Depending on the form factor, indicators are displayed by some kind of panel or menu bar in the shell. However, the actual logic for an indicator resides on the subsystem or application side, which in this context is called the indicator service provider. Unity exports the service data across process boundaries into the shell UI and renders the menus. A single indicator service might export multiple indicators.
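
The split between the indicator service (which owns state and logic) and the shell (which only renders what is exported) can be sketched as below. Process boundaries are ignored for brevity, and every name, including the example network names, is hypothetical rather than part of the Unity Indicators API.

```python
from dataclasses import dataclass, field


@dataclass
class Indicator:
    icon: str
    label: str
    menu: list = field(default_factory=list)


class NetworkIndicatorService:
    """Hypothetical service provider: state and logic live here."""

    def __init__(self):
        self.connected_to = None
        self.known_networks = ["home", "office"]

    def export(self):
        """Return the indicators this service exposes to the shell."""
        status = self.connected_to or "disconnected"
        menu = [f"Status: {status}"]
        menu += [f"Connect to {name}" for name in self.known_networks]
        return [Indicator("network", status, menu)]

    def activate(self, action):
        """Handle a menu action forwarded by the shell."""
        prefix = "Connect to "
        if action.startswith(prefix):
            self.connected_to = action[len(prefix):]


def render_panel(services):
    """Shell side: show one label per exported indicator."""
    return [ind.label for service in services for ind in service.export()]
```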

Roadmap

May 2013

COMPLETE: Target of having a first-level, functional integration of Mir & Unity8. Although complete, this integration happened in midsummer rather than May.

October 2013

COMPLETE: Target of having a Mir-Unity8 code base that could be taken to product on a Phone.

April 2014

Target of enabling tablet form factor features for Unity8. Begin laying the foundations of features for full convergence.

October 2014

Harden features for phone, complete lingering tablet features and lay the foundations for a fully converged code base delivering Unity8-Mir based Ubuntu across smartphone, tablet, TV & desktop.

Beyond October 2014

Target a fully converged code base delivering Unity8-Mir based Ubuntu across smartphone, tablet, TV & desktop.

Blueprints

It may be helpful before going further to visit the project and look at the Dependency Diagram towards the bottom of the topic-u-unity-ui blueprint, which essentially reflects all work feeding into achieving convergence. This is currently planned out for our 14.04 release.

Current Blueprints

Unity8 Development

Launchpad Entry: client-1410-unity-ui-shell

Overview: Development of full shell features based on design team input

Goal/Deliverables: Deliver the Unity8 Ubuntu experience

Full spec: none

Depends on:

Historical

Unity8 Integration with Mir

Launchpad Entry: client-1404-unity-ui-shell

Overview: the Unity8 user experience

Goal/Deliverables: A basic Unity shell on Mir with both phone and tablet user experiences

Full spec: none

Depends on:

Unity8 Integration with Mir

Launchpad Entry: client-1303-unity-ui-iteration-0

Overview: Integrating Unity Next on to Mir

Goal/Deliverables: A basic functioning Unity shell on Mir, good enough to continue development towards a phone product

Full spec: none

Depends on:

We will know we have finished when the Unity shell is using Mir as a session compositor and input dispatcher

Unity8 Quality

Launchpad Entry: client-1303-unity-ui-iteration-0

Overview: Much of the Unity Next code base inherited from Ubuntu Touch needs to have testing introduced, along with some generic code review/cleanup

Goal/Deliverables: Introduce testing for 90% of code base

Full spec: none

Depends on:

We will know we have finished when we have 90% code coverage in testing through Autopilot and have introduced benchmark tracking with Valgrind

UnityNextSpec (last edited 2014-06-03 20:13:48 by pool-173-64-197-91)