UECInstallerEnhancement

Launchpad Entry: foundations-lucid-uec-installer-enhancement

Created: 2009-11-25

Contributors: ColinWatson, MattZimmerman, ThierryCarrez, DanNurmi, ...

Packages affected: eucalyptus-udeb

Summary

Building on 9.10, which made it possible to install UEC on two servers, we will support a greater variety of configurations, such as those described in https://help.ubuntu.com/community/UEC/Topologies. This includes updating the installer to support them.

Release Note

The UEC installer now supports installing clouds with a greater variety of topologies, including multiple clusters.

Rationale

This change expands UEC installation support to cover more sophisticated setups, in addition to the simple single-cluster setup supported in 9.10. Multi-cluster support is valuable in more complex environments.

Target topologies

- Multiple clusters

- CLC/Walrus on a single powerful machine, plus multiple CCs

- SCs broken out onto machines near the file servers; CLC/CC elsewhere

Assumptions

Design

Implementation

UI Changes

Code Changes

Migration

Test/Demo Plan

Unresolved issues

BoF agenda and discussion

== Discovery ==

Continue to support Avahi for discovery, but also support manual entry of the CLC location, since CCs may not be in the same Avahi broadcast domain as the CLC.
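A minimal sketch of the fallback logic follows. It assumes the Avahi browse results have already been collected into a list of addresses. `locate_clc` and the `ask` prompt callback are hypothetical names, not part of eucalyptus-udeb.

```python
from ipaddress import ip_address
from typing import Callable, Sequence


def locate_clc(avahi_hits: Sequence[str], ask: Callable[[], str]) -> str:
    """Return the CLC address, falling back to manual entry.

    avahi_hits: CLC addresses found by Avahi (assumed already collected).
    ask: hypothetical callback that prompts the administrator for an address.
    """
    # Avahi (mDNS) only sees hosts in the local broadcast domain, so a CC in
    # another cluster network will typically get no hits at all.
    hits = sorted(set(avahi_hits))
    if len(hits) == 1:
        return str(ip_address(hits[0]))
    # Zero or several candidates: ask instead of guessing.
    while True:
        answer = ask().strip()
        try:
            return str(ip_address(answer))
        except ValueError:
            continue  # malformed input, prompt again
```

In an interactive installer, `ask` could be something like `lambda: input("CLC address: ")`. Passing it as a callback keeps the decision logic testable without a terminal.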

"Who needs to be able to discover whom":

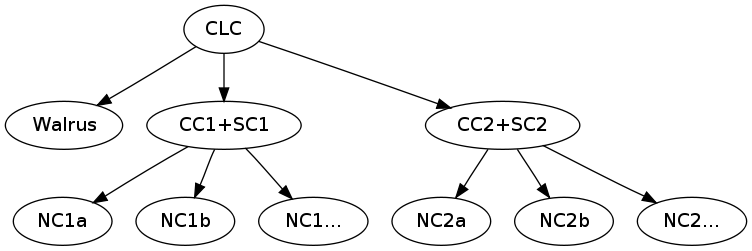

digraph euca {
  CLC;
  Walrus;
  CC1;
  SC1;
  CC2;
  SC2;
  NC1a;
  NC2a;
  CLC -> Walrus;
  CLC -> CC1;
  CLC -> SC1;
  CLC -> CC2;
  CLC -> SC2;
  CC1 -> NC1a;
  CC2 -> NC2a;
}

"Who needs to be able to connect to whom":

digraph euca {
  CLC;
  Walrus;
  CC1;
  SC1;
  CC2;
  SC2;
  NC1a;
  NC2a;
  CLC -> Walrus;
  CLC -> CC1;
  CLC -> SC1;
  CC1 -> NC1a;
  SC1 -> Walrus;
  NC1a -> Walrus;
  CLC -> CC2;
  CLC -> SC2;
  CC2 -> NC2a;
  SC2 -> Walrus;
  NC2a -> Walrus;
}
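Comparing the discovery graph with the connection graph shows which connections are not covered by the source's own discovery. In this example, every such edge ends at Walrus, so SCs and NCs must be given Walrus's address some other way. The small sketch below encodes both edge lists as Python sets and computes that difference. The names are illustrative only.

```python
from typing import List, Set, Tuple

Edge = Tuple[str, str]  # (source, target)

# Edges copied from the "discover" digraph.
DISCOVER: Set[Edge] = {
    ("CLC", "Walrus"), ("CLC", "CC1"), ("CLC", "SC1"), ("CLC", "CC2"),
    ("CLC", "SC2"), ("CC1", "NC1a"), ("CC2", "NC2a"),
}

# Edges copied from the "connect" digraph.
CONNECT: Set[Edge] = {
    ("CLC", "Walrus"), ("CLC", "CC1"), ("CLC", "SC1"), ("CC1", "NC1a"),
    ("SC1", "Walrus"), ("NC1a", "Walrus"), ("CLC", "CC2"), ("CLC", "SC2"),
    ("CC2", "NC2a"), ("SC2", "Walrus"), ("NC2a", "Walrus"),
}


def undiscovered(discover: Set[Edge], connect: Set[Edge]) -> List[Edge]:
    """Connections where the source never discovers the target itself."""
    return sorted(connect - discover)


if __name__ == "__main__":
    for src, dst in undiscovered(DISCOVER, CONNECT):
        print(f"{src} must be told where {dst} is")
```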

Where does registration need to happen?

* each NC needs to be registered with its CC

* all other components need to be registered with the CLC

* we need to know which components belong to which cluster
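The three registration rules can be captured in a few lines. This is a minimal sketch that assumes each component is described by a name, a kind, and a cluster label. The `Component` class and `registrations` function are hypothetical, not existing eucalyptus code.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Component:
    name: str                      # e.g. "nc1a"
    kind: str                      # "CLC", "Walrus", "CC", "SC" or "NC"
    cluster: Optional[str] = None  # None for the cluster-less CLC and Walrus


def registrations(
    components: Iterable[Component],
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each component to (component it registers with, its cluster)."""
    comps = list(components)
    clc = next(c.name for c in comps if c.kind == "CLC")
    cc_of = {c.cluster: c.name for c in comps if c.kind == "CC"}
    plan = {}
    for c in comps:
        if c.kind == "CLC":
            continue  # the CLC is the root of the hierarchy
        if c.kind == "NC":
            plan[c.name] = (cc_of[c.cluster], c.cluster)  # NC -> its own CC
        else:
            plan[c.name] = (clc, c.cluster)  # Walrus, CC, SC -> CLC
    return plan
```

Carrying the cluster label through to the CLC entries covers the third point above. The sketch raises if the CLC or a cluster's CC is missing, which the installation order in these notes is meant to prevent.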

What should the installer do?

1. discovery

2. registration?

3. push of credentials

The installer should do discovery, transfer credentials, and write config files (including discovered IPs); upstart scripts should then complete registration at boot.
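The sketch below shows the install-time half of this split. The installer records discovered values as shell-sourceable `KEY=value` lines that a boot-time upstart job could read before completing registration. The function name and any key names used with it (such as `CLC_IP` or `CLUSTER_NAME`) are hypothetical placeholders, not actual eucalyptus.conf variables.

```python
import re
import shlex
from typing import Mapping

# Shell variable names: a letter or underscore, then letters, digits, underscores.
_KEY = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def render_discovered_config(values: Mapping[str, str]) -> str:
    """Render discovered settings as shell-sourceable KEY=value lines."""
    lines = ["# Written by the installer; read at boot to finish registration."]
    for key in sorted(values):
        if not _KEY.fullmatch(key):
            raise ValueError(f"not a valid shell variable name: {key!r}")
        # shlex.quote leaves plain IPs untouched and quotes anything else.
        lines.append(f"{key}={shlex.quote(values[key])}")
    return "\n".join(lines) + "\n"
```

Because the output is valid shell, an upstart job could load it with `.` before running the registration step.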

Currently, credentials are pushed to the NC by way of a preseed file created during cluster installation.

This will need to be done for other remote components as well (but with a preseed file on the cloud).

The component hierarchy is designed around Ethernet broadcast domains: in multi-cluster setups each cluster is expected to be in a separate broadcast domain, while the central infrastructure (cloud, Walrus) is expected to be in a single domain.
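A sketch of how an installer might check a planned layout against this expectation. It uses each host's IP subnet as a stand-in for its Ethernet broadcast domain. This is an approximation, because one broadcast domain can carry several subnets. The function name and input shape are illustrative.

```python
from ipaddress import ip_interface
from typing import Dict, List


def check_broadcast_domains(
    central: List[str], clusters: Dict[str, List[str]]
) -> List[str]:
    """Flag layouts that depart from the expected broadcast-domain layout.

    Addresses use CIDR notation ("10.0.0.5/24"); each host's subnet is
    used as a stand-in for its Ethernet broadcast domain.
    """
    problems = []
    # CLC and Walrus are expected to share a single domain.
    if len({ip_interface(a).network for a in central}) > 1:
        problems.append("CLC/Walrus span several subnets")
    owner = {}  # subnet -> first cluster seen in it
    for name, addrs in clusters.items():
        nets = {ip_interface(a).network for a in addrs}
        if len(nets) > 1:
            problems.append(f"{name} spans several subnets")
        for net in nets:
            # Each cluster is expected to sit in its own domain.
            if net in owner and owner[net] != name:
                problems.append(f"{name} shares {net} with {owner[net]}")
            owner.setdefault(net, name)
    return problems
```

The check deliberately does not flag a cluster that shares the central subnet, since the single-cluster setup from 9.10 does exactly that.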

Network topology configuration:

* MANAGED-NOVLAN enables all features except isolation between VMs

* MANAGED adds that isolation (using VLANs)
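Choosing between the two modes is a small decision the installer could ask about. This sketch makes two assumptions. First, that the mode ends up in the `VNET_MODE` setting of `eucalyptus.conf`, as in Eucalyptus 1.6. Second, that MANAGED mode relies on VLAN tagging and so needs switches between the CC and the NCs that pass VLAN-tagged traffic. Both function names are hypothetical.

```python
def choose_vnet_mode(need_vm_isolation: bool, vlan_clean: bool) -> str:
    """Pick between the two managed networking modes.

    need_vm_isolation: whether VMs must be isolated from each other.
    vlan_clean: whether the network passes VLAN-tagged traffic, which
    MANAGED mode relies on for isolation.
    """
    if not need_vm_isolation:
        return "MANAGED-NOVLAN"  # all features, no isolation between VMs
    if not vlan_clean:
        raise ValueError("MANAGED mode needs a network that passes VLAN tags")
    return "MANAGED"  # adds isolation using VLANs


def vnet_mode_line(mode: str) -> str:
    """Render the choice as a shell-style config assignment."""
    return f'VNET_MODE="{mode}"'
```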

Public/private interface selection bug: https://bugs.launchpad.net/ubuntu/+source/eucalyptus/+bug/455816

Avahi publication on the cluster tends to pick up the nodes' public IPs as well

Solution: add a TXT record containing the IP address that should actually be used
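This is a sketch of the consumer side of that fix. It assumes the published service carries a TXT entry such as `ip=10.0.0.7`, and that the TXT strings have already been extracted from the Avahi browse results. The `ip` key is a hypothetical name, not an existing convention. DNS-SD TXT keys are case-insensitive, and only the first occurrence of a key counts (RFC 6763), so the lookup follows both rules.

```python
from ipaddress import ip_address
from typing import Iterable


def preferred_address(resolved: str, txt: Iterable[str], key: str = "ip") -> str:
    """Return the address from the TXT record if present, else `resolved`.

    `key` is a hypothetical TXT key chosen for this sketch.
    """
    for entry in txt:
        k, sep, value = entry.partition("=")
        if not sep or k.lower() != key.lower():
            continue
        # Only the first occurrence of a key counts, so stop here either way.
        try:
            return str(ip_address(value))
        except ValueError:
            return resolved  # malformed value: keep the resolved address
    return resolved
```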

Installation order:

1. CLC

2. CC1

3. SC1

4. NC1a

Offer next plausible choices:

* If only CLC installed, offer Walrus or CC

* If CLC and Walrus installed, offer CC

* If CLC and CC installed, offer Walrus, SC, or new CC

* If CLC, Walrus, CC, and SC installed, offer NC or new CC

* ... etc.
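The offers listed above follow a few simple rules. The sketch below covers a single cluster and tracks only which component kinds are already installed. `next_choices` is a hypothetical helper. It reproduces the four listed cases, but its behavior in other cases is extrapolated from those rules rather than taken from these notes.

```python
from typing import AbstractSet, List


def next_choices(installed: AbstractSet[str]) -> List[str]:
    """Suggest which components to install next, in display order."""
    if "CLC" not in installed:
        return ["CLC"]  # everything else registers with the CLC
    choices = []
    if "Walrus" not in installed:
        choices.append("Walrus")
    if "CC" in installed and "SC" not in installed:
        choices.append("SC")  # an SC belongs to an existing cluster
    if all(k in installed for k in ("Walrus", "CC", "SC")):
        choices.append("NC")
    choices.append("CC")  # the first CC, or a new CC for another cluster
    return choices
```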